
Ethics of the Future

Alexander Joy on the importance of not hurting future people.

We face a unique problem when we attempt to formulate an ethical system in which future generations are taken into account: the subjects of our attention do not exist. Of course, we assume that future generations will exist, barring an apocalypse of some kind, such as an unexpected human extinction. Here I would like to sketch some of the difficulties involved in articulating an ethics that incorporates not-yet-existent subjects. First, there is the question of whether we ought to factor future generations into our ethical decision-making at all. Second, if we do decide that we should think about future people when evaluating our actions, there is the question of which kind of ethical system we should use to do so. Third, which species of our chosen ethical system should we adopt? While I’ll attempt to provide answers for each of these questions, my overall intent is to open a discussion about the problems associated with a future-conscious ethics.

Should Ethics Care About The Future?

Before we can worry over how to formulate our ethics of the future, we must determine whether the future even has a place in our ethical discussions. Must we take future people into account when judging the rightness or wrongness of our actions?

In some ways it seems a bit absurd to be concerned about people who don’t exist. For example, we don’t fret about what deforestation will do to the local (non-existent) hobbit population. Because hobbits do not exist, nothing we do would affect them. Yet it seems to me that there’s a crucial difference between a non-existent hobbit and a not-yet-existent human being: the former will never exist, whereas the latter (probably) will. As such, future human beings belong to a special class of non-existent entities. We could call this class ‘pending subjects’, and define them roughly as a group of people who would deserve ethical consideration if they existed, and who in all likelihood will exist someday.

Now that we’re free from absurdity, let’s move on to deciding whether we should think about these pending subjects when ethically evaluating our actions. I suggest that we must take future subjects into account regardless of the ethical system we use. Here I will distinguish two main categories of ethical systems: deontology and consequentialism. A deontological system is any ethical system which holds that actions can be inherently right or wrong regardless of their consequences. For example, a deontologist might suppose that because we have a duty always to tell the truth, lying is never permissible, even in extreme circumstances. A consequentialist system, on the other hand, is a system in which the consequences of one’s actions are the main concern for ethical evaluation, taking precedence over either the actions themselves or the intentions for performing them. A consequentialist thinker might believe even murder to be appropriate if it would save many other lives. Some philosophers believe that these two types of ethical systems could be reconciled into a single way of thinking. For my purposes, I only wish to highlight that they can be thought of as two possible ways of doing ethics. Next I wish to demonstrate that both of these ways are inherently concerned with future subjects.

How is a deontological system concerned with the future? Because, in effect, it doesn’t care whether the object of your actions is there or not. A deontological ethical system could condemn murder as wrong per se: therefore, murder is wrong regardless of whom one murders – it could be Hitler, it could be your neighbor, it could be a teenager who would have helped with your yard-work thirty years down the road and who has not yet been conceived. The point is that if the action is already wrong in and of itself, then the subject that experiences the action (the luckless victim) is not really a part of the ethical evaluation. So in a deontological system, it does not matter whether the subject exists already or is yet to exist. They are treated with equal concern (or lack of concern). As such, deontological systems implicitly factor in future people, since they’re regarded in the same manner as current people.

A consequentialist system also includes future people in its considerations, because consequentialism is always concerned with the future. It evaluates whether or not an action is ethical based upon its ramifications – by definition, what effect the action will have in the future. And since the future is already part of consequentialist thinking, it’s not much of a stretch for future people to receive ethical consideration within this framework. For instance, the short-term consequences of emptying a barrel of hazardous chemicals into the water supply will be severe: it will kill lots of wildlife and contaminate the town’s drinking water. But when I look further into the future, I realize that the contamination may cause birth defects, which would harm those who have yet to be born. A system that makes ethical judgments based on consequences will be preoccupied with the future, and so with the subjects who will populate it.

Now, if we’re obligated to care about the future no matter which ethical approach we favor, we’re left with another important question. Which of the two types of ethical systems is better suited for dealing with future people? Sure, they both suggest that we should treat future people ethically. But does one offer a better prescription for doing so?

I’m inclined to believe that a consequentialist system can take account of future people more effectively than a deontological one. This is because I can devise no deontological formulation for treating future people ethically that does not itself have a flavor of consequentialism. For example, if we say that we should preserve the planet, we’ll likely say that this is for the sake of future generations: we have a duty to them in our role as the current stewards of the Earth (and an ethics of pure duty is a deontological ethic). Yet, if we unpack this a bit, we see that there is a consequentialist undertone to it. Basically, we’re saying, “We must leave future generations with an intact planet, because if we don’t, they’ll suffer badly.” Our duty is thus defined in terms of consequences. So I’m going to confine myself to looking at consequentialist methods of evaluation.

Utilitarianism, Painism, & Future Subjects

If we’re going to use a consequentialist system to assess our ethical obligations toward future people, the next question is, which version of it is most suitable? Perhaps utilitarianism would be a good place to start, as it’s virtually synonymous with consequentialist ethics. I would like to suggest that a certain species of utilitarianism, known as ‘painism’, is the best candidate here.

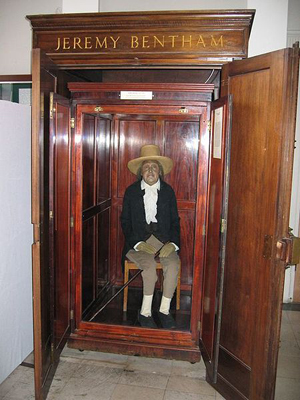

What Jeremy Bentham left for future generations: his corpse on display at his old college.

Let me sketch what I mean by ‘utilitarianism’ and ‘painism’. ‘Utilitarianism’ in the classical sense refers to the brand of consequentialist ethics advanced by Jeremy Bentham (1748-1832), and his godson, John Stuart Mill (1806-1873). Bentham once summarized our moral goal as ‘the greatest happiness of the greatest number’. In other words, utilitarianism claims that an action is morally warranted if it adds to the overall amount of happiness in the world, by maximizing pleasure, minimizing pain, or both. This overall amount must take into account not only the pleasure or pain felt by a given individual, but how many people are feeling how much pleasure or pain. Thus, a utilitarian thinker might find the prolonged torture of a single person justifiable if it brings happiness to a great many people: although the pleasure each person derives from the torture is small, the aggregate of their happiness exceeds the intense pain felt by the one person being tortured.

Since utilitarianism can in theory lead to situations like the torture scenario, which seems to advocate the perpetration of injustices for the sake of mass advantage, some utilitarian thinkers have sought to fine-tune Bentham’s initial proposition. A rather compelling attempt to do so comes from Richard D. Ryder. In his essay ‘Painism Versus Utilitarianism’ (Think, Vol. 8, No. 21, 2009), Ryder explains his ‘painist’ qualification of utilitarianism as follows:

“The pains and pleasures of each sentient individual are calculated, but they cannot be totalled across individuals. So it may be justifiable to cause or tolerate mild pain for one individual in order to reduce the greater pain of another individual, but it’s never permissible to add up the pains or pleasures of several individuals in such calculations. A better way to rate the badness of a situation… is by the quantity of pain experienced by the most affected sufferer. The suffering of each individual really means something, whereas totals of sufferings across individuals are meaningless.”

Painism does not consider the sum total of happiness or pain, then; it focuses instead on the intensity of pain in individuals. This sidesteps unfair scenarios like the torture case. We cannot torture somebody for the mild happiness of many. Instead we compare the intense pain of the tortured person to the small pleasure of any single observer, and discover that the pleasure does not outweigh the pain. Painism, then, is a version of utilitarianism that focuses on the individual. But more importantly (as I’ll explain), it’s a formulation that regards minimizing pain rather than maximizing pleasure as the moral goal.
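The difference between the two rules in the torture case can be made concrete with a toy calculation. What follows is a minimal Python sketch, not anything Bentham or Ryder wrote: the numeric ‘units’ of pleasure and pain, the hypothetical function names utilitarian_permits and painist_permits, and the rule of comparing the single largest benefit with the single worst pain are all illustrative assumptions, offered as one simplified reading of Ryder’s ‘most affected sufferer’ criterion.

```python
# Toy illustration only. Each number is a hypothetical effect of an act on one
# individual: positive = pleasure gained, negative = pain suffered. The units
# are invented; neither Bentham nor Ryder assigns such values.

def utilitarian_permits(effects: list[float]) -> bool:
    """Classical aggregation: permit the act if the total across everyone is positive."""
    return sum(effects) > 0


def painist_permits(effects: list[float]) -> bool:
    """Simplified painist rule (an assumption, not Ryder's own formulation).

    Effects are never totalled across individuals. The act is permitted only if
    the largest benefit to any one individual exceeds the worst pain inflicted
    on any one individual.
    """
    worst_pain = max((-e for e in effects if e < 0), default=0.0)
    best_gain = max((e for e in effects if e > 0), default=0.0)
    return best_gain > worst_pain


# Torture scenario: one victim suffers 100 units; 1000 onlookers each gain 1.
torture = [-100.0] + [1.0] * 1000
print(utilitarian_permits(torture))  # True: the total is 1000 - 100 = 900
print(painist_permits(torture))      # False: no onlooker's 1 unit outweighs the victim's 100

# Ryder's permitted case: mild pain (2) to one person relieves greater pain (10) in another.
relief = [-2.0, 10.0]
print(painist_permits(relief))       # True
```

Treating the relief of pain as a positive effect is itself a modelling choice; a fuller treatment would rate whole situations, before and after the act, by their most affected sufferer, as the passage quoted from Ryder suggests.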

Which of these two systems is better suited for dealing with future persons? Painism scores a point over classical utilitarianism as an ethics of the future by focusing upon minimizing pain rather than maximizing pleasure. In my view, it makes more sense to worry about future people feeling pain from our actions than feeling pleasure from them. I don’t mean to sound pessimistic and I certainly don’t mean that we’re incapable of providing happiness to future generations. Instead, I mean that pain is a more constant, less malleable type of feeling than happiness, so it is easier to predict or at least allow for when planning our current actions. To put this another way, it seems to me that pain-causing things do not generally change much from generation to generation, whereas pleasure-causing things change a lot. Think, for instance, of some of the things that make you happy: they might include your favorite movie, book, song, or TV program. Now ask yourself, will any of these things necessarily make later generations happy? My own students do not care much for my musical tastes. Now consider some of the things that make you unhappy or that cause you pain. Perhaps the list includes poverty, illness, war, famine, disaster… I’m inclined to believe that these things would also cause pain to people much younger than myself, as well as to those who do not yet exist. Therefore, if we want to do good by future generations, it would be more advantageous for us to focus upon minimizing their pain, because we’re more likely to succeed in that regard than in our positive attempts to make them happy.

Another way that painism seems superior is that Benthamite utilitarianism seems as if it can only properly process existing subjects. Its basic premise is that we should maximize pleasure; but pleasure is a feeling, and can only exist in a mind. Strictly, we cannot talk about the happiness of future individuals in this formulation, because they do not exist, and therefore are not feeling anything. Moreover, we don’t know anything about what future individuals might feel, or even if they will exist, or how many of them there will be. So when Bentham decrees that we must maximize happiness, he’s telling us to maximize happiness for currently existing persons, or at least for persons we know will exist and whose happiness we can factor in with reasonable accuracy. Consequently, classical utilitarianism does not seem well-equipped to accommodate merely possible persons.

Painism avoids this pitfall. At first glance, it seems as if painism would fall into the same trap of needing to talk about actual, knowable feelings of pain and pleasure, and so not being able to calculate for the future. Yet painism’s unique formulation bases its moral judgments not only on actual feelings, but also upon potential feelings, so it’s already talking about potential states of mind. To see this, let’s take a look at Ryder’s more concise formulation of painism:

1) A feels pain a.

2) B feels pain b.

3) But no-one feels a+b.

Therefore a+b is meaningless.

The third premise is key here. It contains two different assumptions. The first is that nobody actually feels both the pain of A and the pain of B at the same time. The second assumption (and most crucial for our purposes) is that nobody could feel the pains of A and B at the same time. So with painism we’re not speaking solely of actual feelings, we’re also thinking about potential feelings. But if we’re already making allowances for potentially-felt emotions, we can relatively easily move on to discussing not-yet-existent people, as their feelings are all (and only) potentially felt.

Photo by Alfred Schrock on Unsplash

No Pain(ism), No Gain

Constructing an ethics that encompasses future people might be a difficult task. I have argued that the best way to tackle it is using a consequentialist framework. I have also attempted to argue that this approach is most likely to succeed if we focus on the pain our actions will cause future people. The implication is that we should minimize the pain our actions will inflict upon future people. Perhaps a good starting point for applying this theory, then, is to try our best not to destroy the planet. We would spare future generations quite a bit of pain if we don’t leave them a barren wasteland.

© Alexander B. Joy 2019

Alexander B. Joy holds a PhD in Comparative Literature from the University of Massachusetts Amherst.